That cable management is horrendous. Pull them out.

But it’s the spaghetti cabling that makes it work and highly robust.

There is an eerie resemblance between the smallest neuron and the largest structure in the universe: the galaxy filament.

Noam Chomsky said “we don’t know what happens when you cram 10^5 neurons* into a space the size of a basketball” - but what little we know is astonishing & a marvel

*whatever the number is

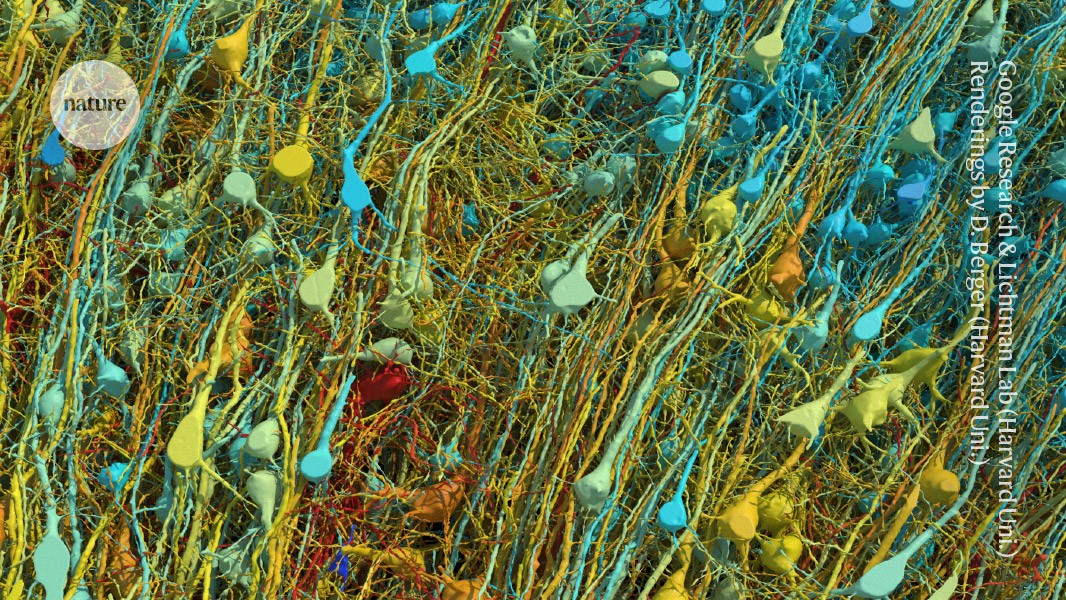

The 3D map covers a volume of about one cubic millimetre, one-millionth of a whole brain, and contains roughly 57,000 cells and 150 million synapses — the connections between neurons. It incorporates a colossal 1.4 petabytes of data.

Assuming this means the total data of the map is 1.4 petabytes. Crazy to think that mapping of the entire brain will probably happen within the next century.

If one millionth of the brain is 1.4 petabytes, the whole brain would take 1.4 zettabytes of storage, roughly 4% of all the digital data on Earth.
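For anyone who wants to check that scaling, here is a rough sketch of the arithmetic. It assumes decimal (SI) units and takes the "one-millionth" and "4%" figures at face value; the variable names are just for illustration.

```python
# Back-of-the-envelope scaling, assuming decimal (SI) units.
PETABYTE = 10**15   # bytes
ZETTABYTE = 10**21  # bytes

sample_bytes = 1.4 * PETABYTE  # data for the one-cubic-millimetre map
scale_factor = 1_000_000       # the sample is about one-millionth of a brain

whole_brain_bytes = sample_bytes * scale_factor
whole_brain_zb = whole_brain_bytes / ZETTABYTE

# Assumption: inverting the 4% figure quoted above to see what
# global data total it implies.
implied_global_zb = whole_brain_zb / 0.04

print(f"Whole brain: about {whole_brain_zb:.1f} ZB")
print(f"Implied global data: about {implied_global_zb:.0f} ZB")
```

So the petabyte-to-zettabyte jump is exactly the factor of a million, and the 4% figure only holds if the world's data totals about 35 ZB.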

This is exactly what I’m talking about when I argue with people who insist that an LLM is super complex and totally is a thinking machine just like us.

It’s nowhere near the complexity of the human brain. We are several orders of magnitude more complex than the largest LLMs, and our complexity changes with each pulse of thought.

The brain is amazing. This is such a cool image.

I think of LLMs like digital bugs, doing their thing, basically programmed.

They’re just programmed with virtual life experience instead of a traditional programmer.

Back in the early 2000s CERN was able to simulate the brain of a flatworm. Actually simulate the individual neurons firing. A 100% digital representation of a flatworm brain. And it took up an immense amount of processing capacity for a form of life that basic, far more processor intensive than the most advanced AIs we currently have.

Modern AIs don’t bother to simulate brains, they do something completely different. So you really can’t compare them to anything organic.

https://www.sciencealert.com/scientists-put-worm-brain-in-lego-robot-openworm-connectome

2014, not early 2000s (unless you were talking about the century or something).

OpenWorm project, not CERN.

And it was run on Lego Mindstorms. I am no AI expert, but I am fairly certain that it is not “far more processor intensive than the most advanced AIs we currently have”.

Citation needed on that comment of yours. Because I know for a fact that what I said is true. Go look it up.

Maybe you should be a little less sure of your “facts”, and listen to what the world has to teach you. It can be marvelous.

LLMs don’t work like the human brain; you are comparing apples to suspension bridges.

The human brain works through a series of interconnected nodes and complex chemical interactions. LLMs work on multi-dimensional search spaces; their brains exist in 15 billion spatial dimensions. Yours doesn’t, so you can’t compare the two and come up with any kind of meaningful comparison. All you can do is challenge it against human-level tasks and see how it stacks up. You can’t estimate it from complexity.

I mean you can model a neuronal activation numerically, and in that sense human brains are remarkably similar to hyper dimensional spatial computing devices. They’re arguably higher dimensional since they don’t just integrate over strength of input but physical space and time as well.
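As a rough illustration of modelling a neuronal activation numerically, here is a minimal sketch of a leaky integrate-and-fire neuron, a standard textbook simplification. The function name and every parameter value are made up for the example, not taken from any real dataset, and it only captures integration over time, not over physical space.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, threshold=1.0, v_reset=0.0):
    """Return the time-step indices at which a leaky integrate-and-fire neuron spikes.

    All parameters are illustrative, in arbitrary units.
    """
    v = 0.0  # membrane potential
    spikes = []
    for step, current in enumerate(input_current):
        # The potential leaks back toward zero while accumulating input over time.
        v += dt * (-v / tau + current)
        if v >= threshold:
            spikes.append(step)
            v = v_reset  # reset after firing
    return spikes


# A weak constant input leaks away before reaching threshold;
# a stronger one makes the neuron fire repeatedly.
weak = simulate_lif([0.05] * 100)
strong = simulate_lif([0.3] * 100)
print(len(weak), len(strong))
```

The point of the sketch is that the output depends on the input's history, not just its strength at one instant.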

> LLMs work on multi-dimensional search spaces

You’re missing half of it. The data cube is just for storing and finding weights. Those weights are then loaded into the nodes of a neural network to do the actual work. The neural network was inspired by actual brains.

I wonder where it got its name from?

I have no idea. Maybe someone with a larger neural network than mine can figure it out.

Aha! This is why I can’t think straight! Spaghetti!

Incredible. Very humbling

Humbling? That’s going on in my head. I’m that complicated! Or at least the “hardware” I run on is. I think having a brain that beautifully complex is more empowering than anything! I wonder what new discoveries will stem from this.

Why not both?

I can see both sides:

Super humbling because nature’s complexity can provide data storage and retrieval capacity several orders of magnitude greater than the best we can do right now.

Also super exciting because look at what every brain on the planet is composed of, and how it functions, in a freakin’ cubic millimeter!

Crazy stuff. Wild.

I thought this was a close-up of a fuzzy sweater and was like: “cool”. Then I read the title. “Oh, fuck, yeah.”