On Feb 14th we migrated Lemmy from its standalone Docker setup to the same Kubernetes cluster operating furry.engineer and pawb.fun, discussed in https://pawb.social/post/6591445.

As of 5:09 PM MT on Feb 14th, we are still transferring the media to the new storage, which may result in broken images. Please do still reply to this thread if your issue is media related, but please check again after a few hours and edit your comment to say “resolved” if it’s rectified by the transfer.

As of 11:02 AM MT on Feb 15th, we have migrated all media and are waiting for the media service to come back online and perform a hash check of all files. Once this is complete, uploads should work as normal.

To make it easier for us to go through your issues, please include the following information:

- Time / Date Occurred

- Page URL where you encountered the issue

- What you were trying to do at the time you encountered the issue

- Any other info you think might be important / relevant

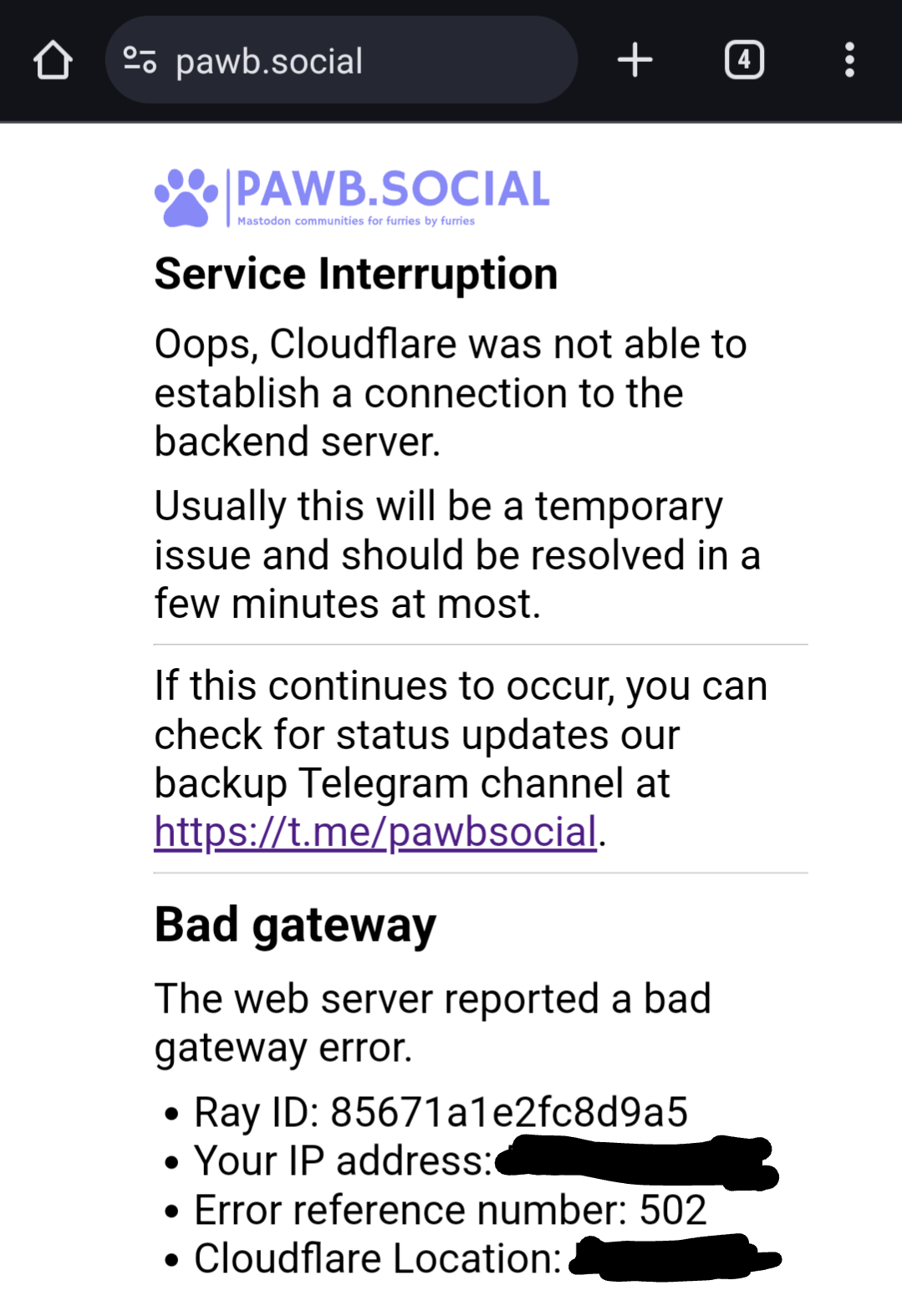

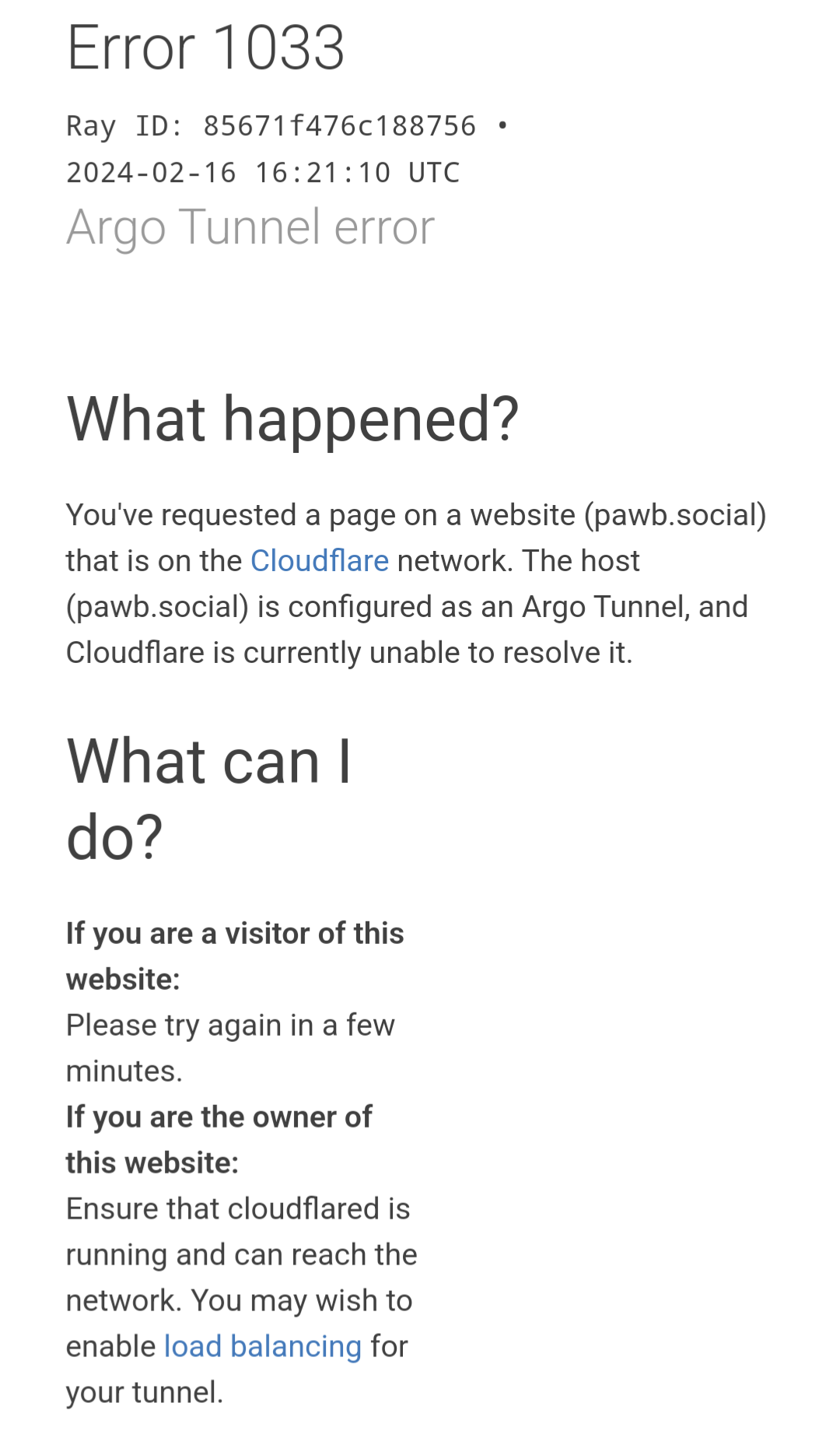

I’m getting intermittent issues from Cloudflare. Seems to start off as a 502, then becomes a 530.

I’m also experiencing this. Cloudflare issues are periodically preventing access, and Voyager for Lemmy is intermittently unable to load any content when signed in through pawb. This has occurred 4 times in the past 2 days; I haven’t been checking much beyond that, but it eventually begins working again.

@Kovukono@pawb.social @name_NULL111653@pawb.social @liquidparasyte@pawb.social Does this still appear to be occurring for you? We were having technical issues over the past day but they appear to be resolved now.

I haven’t been hitting Lemmy that much, but it hasn’t happened since I posted.

Seems to be fine-ish now. Now I’m just getting Thunder specific bugs 🥴

Edit: maybe not. The “502 error, yet it still submits” bug is still here. I dunno

When it next occurs, could you email network@pawb.social and attach a screenshot of the error page? If it happens in an app, could you let me know what action you’re trying to do and I’ll see if I can pin it down in the logs! :3

It looks like federation with feddit.de is broken since the migration.

- 16.02.24 / 07:56 GMT

- https://pawb.social/c/europe@feddit.de (Compare to https://feddit.de/c/europe)

Edit: Works now!

Can also concur that I’m getting 502 errors when posting from a Lemmy client (Thunder), but the post goes through anyway, which I never realized until refreshing the page.

I’m getting issues with external emojis not loading on profiles or posts. Emojis from servers outside of Pawb.fun show up as text and glitch to a very tiny size when hovered over.

I was scrolling through the homepage and noticed that external emojis on remote posts aren’t working correctly.

I’ve noticed the issue since shortly after the migration and I reported on the issue here

Time Encountered: 22:27 UTC (Recent, originally was after migration)

URL: https://pawb.fun/@pyrox@pyrox.dev (URL featuring the occurrence, actual occurrence was on Homepage)

We’re aware of this issue and are working to resolve it. Yesterday, the last of the files from Cloudflare R2 finished transferring. We’re now backing up the files before removing the unnecessary ones and restoring the missing files to local storage. We need to remove the unnecessary files because there are ~6TB of stored media, but only 1TB of it is in use between furry.engineer and pawb.fun.

Any updates on this? Just curious since it was over two weeks ago and the emoji bug is still present. They seem to be fixed in furry.engineer, but pawb.fun still shows glitched or blank emojis. I hope I’m not being annoying by asking again.

Apologies for the delay. We’re working on restoring the emojis over the course of the weekend.

I dunno if this is related, but I don’t think we can post to any other servers right now. Tried on beehaw and lemmy.world and got the same “cannot load post” error.

Is this working again? We were experiencing some networking issues, but they appear to be resolved (https://phiresky.github.io/lemmy-federation-state/site?domain=pawb.social).

Edit: It does appear that we’re lagging a little on outgoing federation activities due to the volume of incoming requests. I’ll look into setting up horizontal scaling for Lemmy in the coming days once we’ve finished setting up the final node in the Kubernetes cluster! We’re currently hamstrung for resources on the two servers we have.

Still getting some weirdness but I assume it will be fixed in the coming days, thanks Crashdoom :)