I have been thinking about self-hosting my personal photos on my Linux server. After the recent backdoor was detected I’m more hesitant to do so, especially because I’m no security expert and don’t have the time and knowledge to audit my server. All I’ve done so far is disable password logins and change the SSH port. I’m wondering if there are more backdoors, and whether I could respond in time if new ones are made. I’d appreciate your thoughts on this for an ordinary user.

“We don’t” is the short answer. It’s unfortunate, but true.

How do you know there isn’t a logic bug that spills server secrets through an uninitialized buffer? How do you know there isn’t an enterprise login token signing key that accidentally works for any account in-or-out of that enterprise (hard mode: logging costs more than your org makes all year)? How do you know that your processor doesn’t leak information across security contexts? How do you know that your NAS appliance doesn’t have a master login?

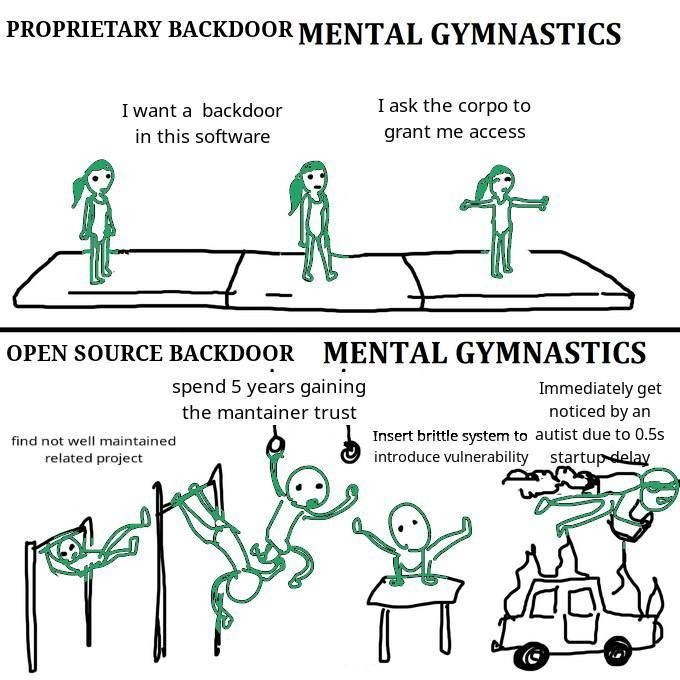

This was a really, really close one that was averted by two things. A total fucking nerd looked way too hard into a trivial performance problem, and saw something a bit hinky. And, just as importantly, the systemd devs had no idea that anything was going on, but somebody got an itchy feeling about the size of systemd’s dependencies and decided to clean it up. This completely blew up the attacker’s timetable. Jia Tan had to ship too fast, with code that wasn’t quite bulletproof (5.6.0 is what was detected, 5.6.1 would have gotten away with it).
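For anyone who wants to check their own box, here is a rough Python sketch. It assumes the `xz` binary is on your PATH and that `xz --version` prints a plain `x.y.z` version number; the function names are made up for illustration. The affected set is the two releases covered by CVE-2024-3094. Distro packages can carry patched or reverted builds under odd version strings, so treat your distro's advisory as the real answer.

```python
import re
import subprocess
from typing import Optional

# Upstream releases reported as backdoored (CVE-2024-3094).
AFFECTED_XZ_VERSIONS = {"5.6.0", "5.6.1"}


def parse_xz_version(version_output: str) -> Optional[str]:
    """Return the first x.y.z version found in `xz --version` output."""
    match = re.search(r"(\d+\.\d+\.\d+)", version_output)
    return match.group(1) if match else None


def is_affected(version: Optional[str]) -> bool:
    """True only for the upstream versions listed above."""
    return version in AFFECTED_XZ_VERSIONS


def installed_xz_version() -> Optional[str]:
    """Ask the local xz binary for its version; None if it cannot be run."""
    try:
        result = subprocess.run(
            ["xz", "--version"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_xz_version(result.stdout)
```

Calling `is_affected(installed_xz_version())` gives a quick yes/no. A "no" only means the upstream version string is not one of the two known-bad ones, nothing more.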

In the coming weeks, you will know if this attacker recycled any techniques in other attacks. People have furiously ripped this attack apart, and are on the hunt for anything else like it out there. If Jia has other naughty projects out here and didn’t make them 100% from scratch, everything is going to get burned.

I think the best assurance is - even spies have to obey certain realities about what they do. Developing this backdoor costs money and manpower (but we don’t care about the money, we can just print more lol). If you’re a spy, you want to know somebody else’s secrets. But what you really want, what makes those secrets really valuable, is if the other guy thinks that their secret is still a secret. You can use this tool too much, and at some point it’s going to “break”. It’s going to get caught in the act, or somebody is going to connect enough dots to realize that their software is acting wrong, or some other spying-operational failure. Unlike any other piece of software, this espionage software wears out. If you keep on using it until it “breaks”, you don’t just lose the ability to steal future secrets. Anybody that you already stole secrets from gets to find out that “their secrets are no longer secret”, too.

Anyways, I think that the “I know, and you don’t know that I know” aspect of espionage is one of those things that makes spooks, even when they have a God Exploit, be very cautious about where they use it. So, this isn’t the sort of thing that you’re likely to see.

What you will see is the “commercial” world of cyberattacks, which is just an endless deluge of cryptolockers until the end of time.

Self hosting personal photos doesn’t generally require opening anything up to the internet, so most backdoors would not be accessible by anyone but you.

Or someone who has penetrated your network.

Of which the chances are slim to none for 99% of people simply because they aren’t interesting enough to be a target beyond phishing, scans, and broad attacks.

You don’t. Hackers often exploit things like this for ages before they are found. Every bit of non-trivial software also has bugs.

But chances are you won’t be the target.

Just keep everything updated and you should be alright.

And implement least privilege and automatic updates

Cheeky answer:

Actual answer:

Theoretically anyway, open source software’s guarantee of “no backdoor” is that the code is auditable, and you could study it and know if it has any holes and where. Of course, that presumes that you have the knowledge AND time to actually go and study thousands of lines of code. Unrealistic.

Slightly less guaranteed, but still good enough to calm my mind, is the idea that there is a whole-ass community of people who do know their shit and who are constantly checking this. Do note that closed source software is known to be backdoored; only, the backdoors are mostly meant for either the owners of the software (check the fine print folks) or worse, the governments.

The biggest thing that you should note is that: It is unlikely that you (or I or most of the people here) are interesting enough that anyone will actually exploit those vulnerabilities to personally fuck you over. Your photos aren’t interesting enough except as part of a mass database (which is why Google/Facebook want them). Same for your personal work data and shit.

Unless those backdoors could be used to turn your machine into a zombie for some money-making scheme (crypto or whatever) OR you’re connected to people in power OR you personally piss off someone who is a hacker – it is very unlikely you’ll get screwed over due to those vulnerabilities :P

Just a point to add: this backdoor was (likely) planned years in advance; it took ONE guy a couple weeks (after the malicious code was released) to find it because he had nothing else going on that evening.

I’m relatively confident that the FOSS community has enough of that type of person that if there are more incidents like this one, there’s a decent chance it’ll be found quickly, especially now that this has happened and gotten so much attention.

Even better: there are also backdoors in closed-source software that will never be found. There may be fewer backdoors inserted, but the ones that get in there are far more likely to stay undiscovered.

That’s the neat part, we don’t!

…but, we at least can have a shot of finding them.

In the meantime, I’d advise you to keep an eye out and maybe look into threat models. As people said in this thread already, bad actors probably don’t care about your personal photos.

Unless OP is a celebrity or politician. Or knows they have an enemy with the resources to find and exploit potential backdoors.

I would say you can’t, but if you are using open source software, then somebody can and will find them eventually and they will be patched. With closed source software, by contrast, you will never know if it has a backdoor or not. This whole episode shows both the problem with open source, which is the lack of funding for security audits, and the beauty of open source, which is that eventually the backdoor will be detected and removed.

We don’t.

Use software with an active community, don’t install things you don’t need, update regularly, and be thankful that you probably aren’t worth using a zero-day backdoor on. Your telecom provider, on the other hand, might be - but there’s not much you can do about that!

I’m pretty sure most closed source software is already backdoored.

It’s a feature!

Afaik, most phones have backdoors that can be abused using tools like “Pegasus”, which led to huge indignation in Hungary. I don’t believe PCs are exceptions. Intel ME is proprietary software inside the CPU, often considered a backdoor in Intel chips. AMD isn’t an exception. It’s even weirder that Intel produces chips with ME disabled for governments only.

They are not produced for governments only, almost every consumer-grade CPU can have its ME disabled or at least scuttled, thanks to efforts like me_cleaner!

Have you tried it?

We can’t know. If we knew, they wouldn’t be undetected backdoors at all. It’s not possible to know something you don’t know, so the question in itself is a paradox. :D The question is not whether there are backdoors, but whether the most critical software is infected. At least that’s what I ask myself.

Do your backups, man, do not install too much stuff, do not trust everyone, use multiple mail accounts and passwords, and use 2-factor authentication. We can only try to minimize the effects when something horrible happens. Maybe support the projects you like, so that more people can help and have more eyes on it. Governments and corporations with money could do that as well, if they care.

The main solace you can take is how quickly xz was caught: there is a lot of diverse scrutiny on it.

Hmm, not really. It’s only because it nerd-sniped someone who was trying to do something completely unrelated that this came to light. If that person had been less dedicated or less skilled we’d probably still be in the dark.

Call me names… But sometimes the story has far more branched backstories than they actually shed into light.

Trust nobody, not even yourself.

The thing is there are a few thousand of those people

Maybe millions of potential eyes, but all of them are looking at other things! Heartbleed existed for two years before being noticed, and OpenSSL must have enormously more scrutiny than small projects like xz.

I am very pro open source and this investigation would’ve been virtually impossible on Windows or Mac, but the many-eyes argument always struck me as more theoretical/optimistic than realistic.

> Heartbleed existed for two years before being noticed

That’s a different scenario. That was an inadvertently introduced bug, not a deliberately installed backdoor. So the bad guys didn’t have two years to exploit it because they didn’t know about it either.

It’s also not new that very old bugs get discovered. Just a few years ago a 24 year old bug was discovered in the Linux kernel.

And are bugs harder to find than carefully hidden backdoors? No-one noticed the code being added, and if it hadn’t had a performance penalty then it probably wouldn’t have been discovered for a very long time, if ever.

The flip side to open-source is that bad actors could have reviewed the code, discovered Heartbleed and been quietly exploiting it without anyone knowing. Government agencies and criminal groups are known to hoard zero-days.

Check the source or pay someone to do it.

If you’re using closed source software, it’s best to assume it has backdoors and there’s no way to check.

I do IT security for a living. It is quite complicated but not unrealistic for you to DIY.

Do a risk assessment first off - how important is your data to you and a hostile someone else? Outputs from the risk assessment might be fixing up backups first. Think about which data might be attractive to someone else and what you do not want to lose. Your photos are probably irreplaceable and your password spreadsheet should probably be a Keepass database. This is personal stuff, work out what is important.

After you’ve thought about what is important, then you start to look at technologies.

Decide how you need to access your data when off site. I’ll give you a clue: always use a VPN until you feel proficient enough to expose your services directly on the internet. IPsec or OpenVPN or whatevs.

After sorting all that out, why not look into monitoring?

Fun fact, you can use Let’s Encrypt certs in an internal environment. All you need is a domain.

Just be aware that it’s an information leak (all your internal DNS names will be public)

…which shouldn’t be an issue in any way. For extra obscurity (and convenience) you can use wildcard certs, too.

Are wildcard certs supported by LE yet?

Have been for a long time. You just have to use the DNS validation. But you should do that (and it’s easy) if you want to manage “internal” domains anyway.
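For the curious, this is roughly what DNS validation boils down to under the hood. It is a minimal Python sketch of the TXT value an ACME client publishes for the dns-01 challenge as I understand RFC 8555: the base64url-encoded SHA-256 of the key authorization, with padding stripped. The function names and the sample token and thumbprint are made up for illustration; a real client (certbot, acme.sh, the pfSense package) does this for you.

```python
import base64
import hashlib


def base64url(data: bytes) -> str:
    """URL-safe base64 without trailing '=' padding, as ACME uses."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def dns01_txt_value(token: str, account_key_thumbprint: str) -> str:
    """TXT record content for a dns-01 challenge.

    The key authorization is "<token>.<thumbprint>"; the record holds the
    base64url-encoded SHA-256 digest of that string.
    """
    key_authorization = f"{token}.{account_key_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return base64url(digest)


# Hypothetical values, only to show the shape of the call.
example_value = dns01_txt_value("sample-token", "sample-thumbprint")
```

The value goes into a TXT record at `_acme-challenge.<your name>`. For a wildcard cert the `*.` is dropped, so `*.home.example.com` is validated at `_acme-challenge.home.example.com`.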

Oh, yeah, idk. Giving API access to a system to modify DNS is too risky. Or is there some provider you recommend with a granular API that only gives the keys permission to modify TXT and .well-known (eg so it can’t change SPF TXT records or, of course, any A records, etc)

What you can (and absolutely should) do is DNS delegation. On your main domain you delegate the _acme-challenge subdomains with NS records to your DNS server that will do cert generation (and cert generation only). You probably want to run Bind there (since it has decent and fast remote access for changing records and other existing solutions). You can still split it with separate keys into different zones (I would suggest one key per certificate, and splitting certificates by where/how they will be used). You don’t even need to allow remote access beyond the DNS responses if you don’t want to, and that server doesn’t have anything to do with anything else in your infrastructure.
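To make the delegation idea concrete, here is a small Python sketch that prints the NS records you would add to the main zone. The hostnames and the nameserver name `acme-ns.example.net` are placeholders I made up, and the zone-file line format is the generic one; adjust to whatever your DNS provider expects.

```python
from typing import Iterable, List


def delegation_records(
    hostnames: Iterable[str], challenge_nameserver: str, ttl: int = 3600
) -> List[str]:
    """Build NS records that hand each _acme-challenge name to one server.

    Wildcard names are validated at their base name, so "*.example.com"
    and "example.com" share a single delegation.
    """
    seen = set()
    records = []
    target = challenge_nameserver.rstrip(".") + "."
    for hostname in hostnames:
        base = hostname[2:] if hostname.startswith("*.") else hostname
        owner = f"_acme-challenge.{base.rstrip('.')}."
        if owner in seen:
            continue  # already delegated via another entry
        seen.add(owner)
        records.append(f"{owner} {ttl} IN NS {target}")
    return records


# Hypothetical names, for illustration only.
for line in delegation_records(
    ["photos.example.com", "*.example.com"], "acme-ns.example.net"
):
    print(line)
```

With this in place the API key on the cert box can only touch the delegated challenge names, never your A, MX or SPF records in the main zone.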

Not if you set up an internal DNS

How would that prevent this? To avoid cert errors, you must give the DNS name to Let’s Encrypt. And Let’s Encrypt will add it to their public CT log.

Sorry, I thought you were referring to IP leakage. Apologies

I do use it quite a lot. The pfSense package for ACME can run scripts, which might use scp. Modern Windows boxes can run OpenSSH daemons and obviously, all Unix boxes can too. They all have systems like Task Scheduler or cron to pick up the certs and deploy them.
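The "pick up and deploy" half can be as dumb as the following Python sketch, run from cron or Task Scheduler. It assumes the renewed cert has already been copied (by scp or similar) into some incoming folder; the paths and function names are hypothetical. It only replaces the live file when the content actually changed, so you know when a service reload is needed.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, used to detect a renewed cert."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def deploy_if_changed(source: Path, destination: Path) -> bool:
    """Copy source over destination only when the contents differ.

    Returns True when a new file was put in place, which is the caller's
    cue to reload whatever service uses the certificate.
    """
    if destination.exists() and file_digest(source) == file_digest(destination):
        return False
    destination.parent.mkdir(parents=True, exist_ok=True)
    # Write next to the target first, then swap, so the service never
    # sees a half-written file.
    temporary = destination.with_name(destination.name + ".new")
    shutil.copyfile(source, temporary)
    temporary.replace(destination)
    return True
```

Something like `deploy_if_changed(Path("/srv/incoming/fullchain.pem"), Path("/etc/ssl/private/fullchain.pem"))` would be the cron job body, followed by a reload of the web server when it returns True. Private key permissions are left out here and need handling separately.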