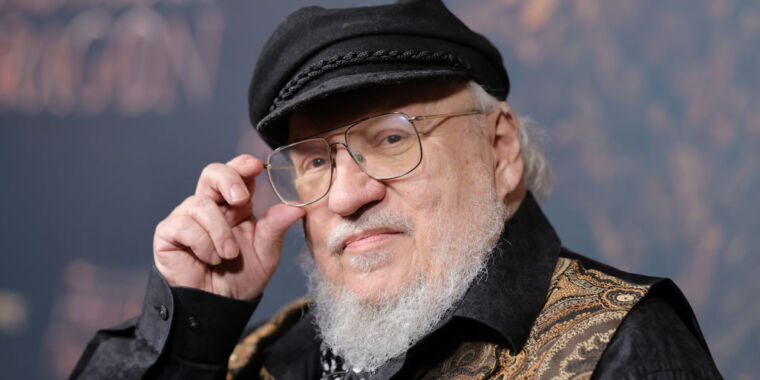

Yesterday, popular authors including John Grisham, Jonathan Franzen, George R.R. Martin, Jodi Picoult, and George Saunders joined the Authors Guild in suing OpenAI, alleging that training the company’s large language models (LLMs) used to power AI tools like ChatGPT on pirated versions of their books violates copyright laws and is “systematic theft on a mass scale.”

“Generative AI is a vast new field for Silicon Valley’s longstanding exploitation of content providers,” Franzen said in a statement provided to Ars. “Authors should have the right to decide when their works are used to ‘train’ AI. If they choose to opt in, they should be appropriately compensated.”

In response to two lawsuits filed earlier this year by authors making similar claims, OpenAI has argued that the suing authors “misconceive the scope of copyright, failing to take into account the limitations and exceptions (including fair use) that properly leave room for innovations like the large language models now at the forefront of artificial intelligence.”

This latest complaint argued that OpenAI’s “LLMs endanger fiction writers’ ability to make a living, in that the LLMs allow anyone to generate—automatically and freely (or very cheaply)—texts that they would otherwise pay writers to create.”

Authors are also concerned that the LLMs fuel AI tools that “can spit out derivative works: material that is based on, mimics, summarizes, or paraphrases” their works, allegedly turning their works into “engines of” authors’ “own destruction” by harming the book market for them. Even worse, the complaint alleged, businesses are being built around opportunities to create allegedly derivative works:

> Businesses are sprouting up to sell prompts that allow users to enter the world of an author’s books and create derivative stories within that world. For example, a business called Socialdraft offers long prompts that lead ChatGPT to engage in ‘conversations’ with popular fiction authors like Plaintiff Grisham, Plaintiff Martin, Margaret Atwood, Dan Brown, and others about their works, as well as prompts that promise to help customers ‘Craft Bestselling Books with AI.’

They claimed that OpenAI could have trained its LLMs exclusively on works in the public domain or paid authors “a reasonable licensing fee” but chose not to. Authors feel that without their copyrighted works, OpenAI “would have no commercial product with which to damage—if not usurp—the market for these professional authors’ works.”

“There is nothing fair about this,” the authors’ complaint said.

Their complaint noted that OpenAI chief executive Sam Altman claims to share their concerns, telling Congress that “creators deserve control over how their creations are used” and deserve to “benefit from this technology.” But so far, the complaint adds, Altman and OpenAI, which the plaintiffs allege “intend to earn billions of dollars” from their LLMs, have “proved unwilling to turn these words into actions.”

Saunders said that the lawsuit, a proposed class action estimated to include tens of thousands of authors (some with multiple works) in which OpenAI could owe $150,000 per infringed work, was an “effort to nudge the tech world to make good on its frequent declarations that it is on the side of creativity.” He also said that the stakes went beyond protecting authors’ works.

“Writers should be fairly compensated for their work,” Saunders said. “Fair compensation means that a person’s work is valued, plain and simple. This, in turn, tells the culture what to think of that work and the people who do it. And the work of the writer—the human imagination, struggling with reality, trying to discern virtue and responsibility within it—is essential to a functioning democracy.”

The authors’ complaint said that as more writers have reported being replaced by AI content-writing tools, more authors feel entitled to compensation from OpenAI. The Authors Guild told the court that 90 percent of authors responding to an internal survey from March 2023 “believe that writers should be compensated for the use of their work in ‘training’ AI.” On top of this, there are other threats, their complaint said, including that “ChatGPT is being used to generate low-quality ebooks, impersonating authors, and displacing human-authored books.”

Authors claimed that despite Altman’s public support for creators, OpenAI is intentionally harming creators, noting that OpenAI has admitted to training LLMs on copyrighted works and claiming that there’s evidence that OpenAI’s LLMs “ingested” their books “in their entireties.”

“Until very recently, ChatGPT could be prompted to return quotations of text from copyrighted books with a good degree of accuracy,” the complaint said. “Now, however, ChatGPT generally responds to such prompts with the statement, ‘I can’t provide verbatim excerpts from copyrighted texts.’”

To authors, this suggests that OpenAI is exercising more caution in the face of authors’ growing complaints, perhaps because authors have alleged that the LLMs were trained on pirated copies of their books. They’ve accused OpenAI of being “opaque” and of refusing to discuss the sources of its LLMs’ training data.

Authors have demanded a jury trial and asked a US district court in New York for a permanent injunction to prevent OpenAI’s alleged copyright infringement, claiming that if OpenAI’s LLMs continue to illegally leverage their works, they will lose licensing opportunities and risk being usurped in the book market.

Ars could not immediately reach OpenAI for comment. [Update: OpenAI’s spokesperson told Ars that “creative professionals around the world use ChatGPT as a part of their creative process. We respect the rights of writers and authors, and believe they should benefit from AI technology. We’re having productive conversations with many creators around the world, including the Authors Guild, and have been working cooperatively to understand and discuss their concerns about AI. We’re optimistic we will continue to find mutually beneficial ways to work together to help people utilize new technology in a rich content ecosystem.”]

Rachel Geman, a partner with Lieff Cabraser and co-counsel for the authors, said that OpenAI’s “decision to copy authors’ works, done without offering any choices or providing any compensation, threatens the role and livelihood of writers as a whole.” She told Ars that “this is in no way a case against technology. This is a case against a corporation to vindicate the important rights of writers.”

I don’t see how this could work.

ChatGPT’s output is not a derivative of a specific novel.

@DogMuffins

As I understand it, it’s not about the output it’s about the input.

Same basic principle as why universities don’t simply give students a pirated copy of the entire textbook.

Same principle as why Google can index pretty much all books in existence. They were sued over this and won. Same thing will happen here.

As long as these models are not providing the copyrighted material to their users they should be safe.

@Akisamb yes I think you’re right.

That one was really interesting too. It has put a few more limits on its full text since those days, but I don’t know how much that was a result of the suit.

The authors from my country tried to bring a group lawsuit against Google because in my country, if your books are in public libraries, you get yearly compensation based on how many copies are in circulation.

But Google and America are both a lot richer and more powerful than a handful of authors from New Zealand, so I don’t know what they thought they could achieve.

Universities giving away pirated textbooks is output.

@hypelightfly It’s input into the students’ brains.

And the very concept of “pirated” textbooks is itself monstrous. Textbooks should be free, in universities, schools, and everywhere. I can see some arguments for copyright maybe possibly being somewhat beneficial for artists (with reasonable limits… for five or maybe even ten years, say, and most definitely no longer than the author’s lifespan, and of course not applicable to companies), but copyrighting learning tools can only be harmful to society.

Hmm… if that were true then why would they be expecting $150k per book?

My understanding is that in copyright infringement, the liability is the amount of income you have deprived the author of. If you’ve only copied one book and not produced derivative works, then the loss is the value of the book.

@DogMuffins

This is why it’s such an interesting case. We think of the LLM as one entity, but another way of looking at it is that it’s a lot of iterations.

The pirated book text files that are doing the rounds with the AI seem to be multiple iterations as well.

One $150 textbook x 1,000 students = $150k.

Even with public libraries, they pay volume licenses for ebooks based on how many “copies” can be lent simultaneously per year. When digital files are being lent, the library isn’t considered to own just one copy.

Sorry mate this is just daft.

Did you read the article? Most of it is about derivative works.

They’re trying to claim that they have been financially harmed by the unauthorised use of their work.

Even if LLMs are separate iterations, you could train multiple LLMs with one copy of the book; the library is not loaning multiple copies simultaneously.

@DogMuffins

Correct me if I’m wrong, but I was under the impression they don’t yet have a derivative work that’s close enough to claim plagiarism. Without that, they don’t have a leg to stand on.

This is the only part I think could potentially hold water:

As for libraries:

I’m not sure why you’re saying that.

With ebook platforms the business model completely depends on the concept of multiple copies. This isn’t my opinion, it’s just a fact about how they bill libraries. Random stats.

Even with physical copies, libraries sometimes buy several (and in my country, publishers get special compensation based on how many copies are in public libraries per year).

It may not calculate well mathematically, if not factually at all, but ChatGPT can sure as hell wax poetic with some direction and input.

But the best I can say is that, for now, ChatGPT should only be relegated to fanfics, tbh, lmao…