Startup in a rented house in a residential neighborhood

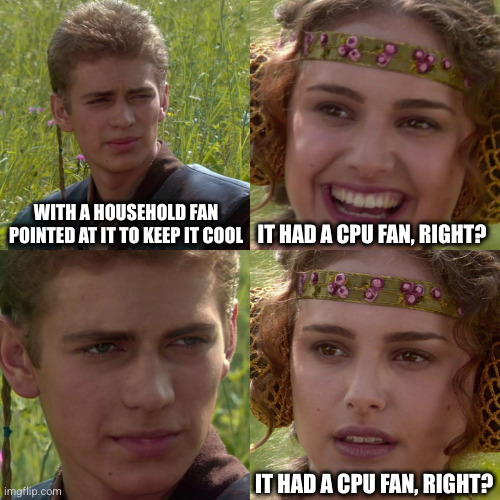

The “router” was an old PC running Linux with a few network cards and no case, with a household fan pointed at it to keep it cool

Loose ethernet cables and little hubs everywhere

Every PC was its own thing and some people were turbo nerds. I had my Linux machine with its vertical monitor; there were many Windows machines, a couple Macs, servers and 2 scrounged Sun workstations also running Linux

No DHCP, pick your own IP and tell the IT guy, which was me, and we’ll set you up. I had a little list in my notebook.

It was great days my friends

We went out of business; no one was shocked

> with a household fan pointed at it to keep it cool

It had a CPU fan, right?

How??

Oh, you’re not OP. Funny though.

I kind of want to work there though.

It was the best of times, it was the worst of times. I turned in a time card once that had over 24 hours of work on it in a row. The boss was dating a stripper, and she would sometimes bring stripper friends to our parties and hangouts. We had ninja weapons in the office. The heat was shitty, so in the winter we had to use space heaters, but that would overload the house’s power which would cause a breaker to blow which obviously caused significant issues, so a lot of people would wear coats at their desks in the winter, but that obviously doesn’t do much for your typing fingers which was an issue. I frequently would sleep in the office on the couch (a couple of people were living in bedrooms in the upstairs of the house).

Like I say, it’s not surprising that we went out of business. It was definitely pretty fuckin memorable though. Those are just some of the stories or right-away memorable pieces off the top of my head.

Source control relied on 2 folders: dev/test and production. Git was prohibited because it would let people see the history of who did what. That made sense in a twisted way, since a previous boss used to single out people who made mistakes and harass them.

When you lift up the red flag and there are more red flags underneath.

My current company has a script that runs and deletes files that haven’t been modified for two years. It doesn’t take into account any other factors, just modification date. It doesn’t ask for confirmation and doesn’t even inform the end user about it.

You should write a script to touch all the files before their script runs.
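For what it’s worth, a rough sketch of that idea in Python. This is only an illustration: `touch_tree` is a made-up name, and I’m assuming the cleanup script looks at nothing but the modification time of regular files, which is all the comment above tells us.

```python
import os
from pathlib import Path


def touch_tree(root: str) -> int:
    """Set the modification time of every regular file under root to now.

    Returns the number of files touched. Hypothetical helper, not the
    company's actual tooling.
    """
    touched = 0
    for path in Path(root).rglob("*"):
        if path.is_file():
            # Passing None tells os.utime to use the current time
            # for both access and modification timestamps.
            os.utime(path, None)
            touched += 1
    return touched
```

You would schedule something like `touch_tree("/path/to/your/files")` to run before the cleanup does. Note that it resets every file’s modification date, so sorting by “recently modified” stops being useful.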

Thought about it but I use modification date for sorting to have the stuff I’ve recently worked on on top. I instead keep the files where the script isn’t looking. The downside is they are not backed up so I might potentially lose them but if I don’t do that, then I’ll lose them for sure…

Office Depot. They are still using IBM machines from the 90s with receipt printers the size of a shoebox.

Is ECO an Ed change order?

A company making signage and signal lights for road construction, with 15 employees. Their former IT guy had switched all of their PCs to Linux for ideological reasons and to save money.

Then they found out that they had a long-term contract for accounting software that housed all their customer and billing data, only ran on Windows, and required a server-client model. So they hauled in the boss’s private laptop, which ran Windows 7, and installed the server role, the database, and the client software on it. When his employees needed to access the accounting software, the boss had to stop what he was doing and grant them full access to his laptop via TeamViewer. When the boss’s laptop was off or he was on vacation, there was no way to access any price info, customer contact info, or financial data (this was during Covid, when everyone was working from home).

The laptop was set up to back up (using Windows 7’s integrated backup tool) to an external drive which wasn’t attached and no one remembered ever existing.

The Linux server (which was actually a gaming PC) was running and attached to an MCU when my company surveyed their infrastructure, but no one (including the former IT guy) knew the correct root password, and we never found out what it was even doing.

This is surely the worst of all.

Using Filezilla FTP client for production releases in 2024 hit me hard

I must have missed that one, what’s going on with Filezilla?

Filezilla itself is not the problem. Deploying to production by hand is. Everything you do manually is a potential for mistakes. Forget to upload a critical file, accidentally overwrite a configuration… better automate that stuff.
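To make that a bit more concrete, here is a minimal sketch of the kind of check an automated deploy step could run before anything gets uploaded. Everything in it is hypothetical: `plan_release`, the `required` set, and the `protected` set are names I made up for illustration, and the actual upload is left out.

```python
from pathlib import Path


def plan_release(release_dir: str, required: set[str], protected: set[str]) -> list[str]:
    """Return the relative paths that should be uploaded.

    Raises if a required file is missing from the release, and leaves out
    protected paths (e.g. server-side configuration) so they are never
    overwritten. Hypothetical helper, not a real deployment tool.
    """
    root = Path(release_dir)
    files = sorted(
        p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()
    )
    missing = required - set(files)
    if missing:
        raise FileNotFoundError(f"release is missing: {sorted(missing)}")
    return [f for f in files if f not in protected]
```

The point is not this particular function. A script fails the same way every time, while a person dragging files around in an FTP client fails differently every time.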

Wait so the production release would consist of uploading the files with Filezilla?

If you can SSH into the server, why on earth use Filezilla?

Are you a software developer?

I started a job at a university department. A previous admin had a habit of re-purposing desktop machines as servers. There were at least a dozen of them. The authentication server for the whole department was on an old Dell desktop. All of the partitions were LVM volumes, and the volume group consisted of 3 physical volumes: The internal SATA drive, a bare SATA drive in an external USB cradle, and an external USB SSD.

This is why we drink.

I’ve often had the impression that universities are the best places to cut your teeth in IT. Even though the pay isn’t great, the environments are said to be some of the most complex you’ll encounter. Any credence to that?

I think that there’s something to that, at least in the case of large universities which are divided into many, many organizational units. They also offer student jobs, which allow good opportunities for learning.

The recent Falcon cock up?

I actually disagree. I only know a little of Crowdstrike internals but they’re a company that is trying to do the whole DevOps/agile bullshit the right way. Unfortunately they’ve undermined the practice for the rest of us working for dinosaurs trying to catch up.

Crowdstrike’s problem wasn’t a quality escape; that’ll always happen eventually. Their problem was with their rollout processes.

There shouldn’t have been a circumstance where the same code got delivered worldwide in the course of a day. If you were sane you’d canary it at first and exponentially increase rollout from thereon. Any initial error should have meant a halt in further deployments.

Canary isn’t the only way to solve it, by the way. Just an easy fix in this case.
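A minimal sketch of what I mean by canarying with an exponentially growing rollout, in Python. This has nothing to do with how Crowdstrike actually ships anything: `staged_rollout` and the `deploy` callback are hypothetical, and a real system would also watch health metrics for a while after each batch instead of trusting a single return value.

```python
from typing import Callable, Sequence


def staged_rollout(
    hosts: Sequence[str],
    deploy: Callable[[str], bool],
    first_batch: int = 1,
) -> list[str]:
    """Deploy in batches that double in size and halt on the first failure.

    deploy(host) is assumed to return True on success. Returns the hosts
    that were updated successfully before the rollout finished or halted.
    """
    if first_batch < 1:
        raise ValueError("first_batch must be at least 1")
    done: list[str] = []
    batch_size = first_batch
    start = 0
    while start < len(hosts):
        batch = hosts[start:start + batch_size]
        for host in batch:
            if not deploy(host):
                # Any error stops all further deployments.
                return done
            done.append(host)
        start += len(batch)
        batch_size *= 2  # 1, 2, 4, 8, ... hosts per batch
    return done
```

With ten hosts the batches are 1, 2, 4, and then the remaining 3, so a bad build that breaks on the first host never reaches the other nine.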

Unfortunately what is likely to happen is that they’ll find the poor engineer that made the commit that led to this and fire them as a scapegoat, instead of inspecting the culture and processes that allowed it to happen and fixing those.

People fuck up and make mistakes. If you don’t expect that in your business you’re doing it wrong. This is not to say you shouldn’t trust people; if they work at your company you should assume they are competent and have good intent. The guard rails are there to prevent mistakes, not bad/incompetent actors. It just so happens they often catch the latter.